see the details at https://stackoverflow.com/questions/6972635/efficient-string-sorting-algorithm/71349193#71349193

The Bizarreness of Optimization

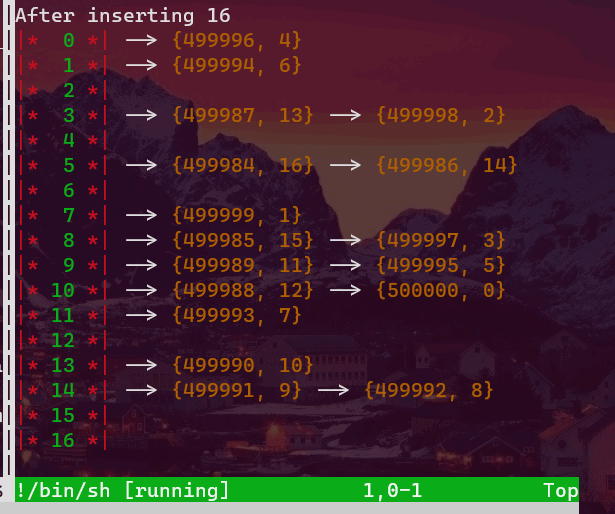

Background: consider the hashtable with closed addressing/separate chaining:

Typically, we can have two different schemes to link through all the nodes. One is to link the last node of the current bucket to the first node of the next non-empty bucket. See the above picture and imagine taht there are links among these buckets. Another is to make all the nodes look exactly the same as the above picture (by which I mean to link the tail nodes with null pointers).

The second approach will have some more work to obtain the successor of the current node. It may need to scan a lot of (empty) buckets to get its successor. Sounds awful, huh? Actually not. By amortized analysis (assume the max_load_factor==1 ), ideally, we will have each node in a (different) bucket exactly. So it can be efficient.

How about the first one? The bad news is that it can suffer from very terrible insertion overhead. Now imagine you have a very big hashtable, tab[i] and tab[j] are two adjacent (non-empty) buckets. The horrible thing is that you have a very big difference |i-j|, and then an item whose hash code k is between i and j is needed to be inserted there. We will need to scan all the way down from k to j in order to update the links of the three nodes: the last node of bucket i, the newly inserted node, the first node of bucket j (you can think of this process as the insertion of a doubly-linked list). And if we constantly perform this pathological operation, j-1, i+1, j-2, i+2,..., things can get really bad, bad, bad, bad.

The Problem: Now if I ask you a simple question, after building a hashtable, we iterate through all the elements, which do you think is faster, the all-linked-up one (1st case) or the only-in-bucket-linked one (2nd case)?

Here is a piece of test code and the whole code can be found here.

int main()

{

#if defined(USE_HASHMAP)

mySymbolTable::HashMap<int, int> mp{};

#elif defined(USE_HASHMAP2)

mySymbolTable::alternative::HashMap<int, int> mp{};

#else

std::unordered_map<int, int> mp{};

#endif

constexpr int N = 1'000'000;

int k = 0;

for(int i=0; i < N; ++i)

mp[N-i] = i;

auto t1 = std::clock();

for(auto it=mp.begin(); it!=mp.end(); ++it)

++k;

auto t2= std::clock();

auto iteration_time_elapsed = (t2 - t1) / (double)1'000;

std::cout << "Iterating through " << k << " items used "

<< iteration_time_elapsed << "ms\n";

return 0;

}

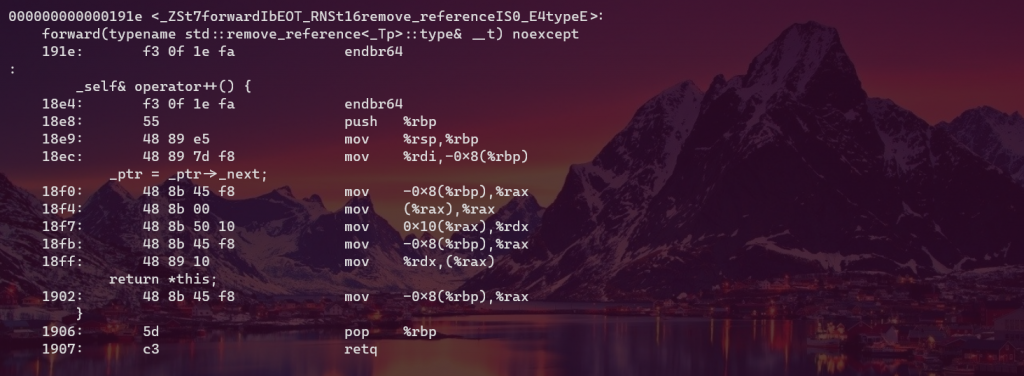

Essentially, they run the following code respectively

_self& operator++() {

_ptr = _ptr->_next;

return *this;

}

vs

_self& operator++() {

auto next = _table->next_entry(_ptr, _bucket_index);

_ptr = next.first;

_bucket_index = next.second;

return *this;

}

std::pair<node_ptr, size_t> next_entry(node_ptr x, size_t bucket_index) const {

assert(x != nullptr && "cannot increment end() iterator");

if (x->_next) return { x->_next, bucket_index };

size_t i = bucket_index + 1;

for (; i < _hashtable.size(); ++i) {

if (_hashtable[i]) return { _hashtable[i], i };

}

return { nullptr, i };

}

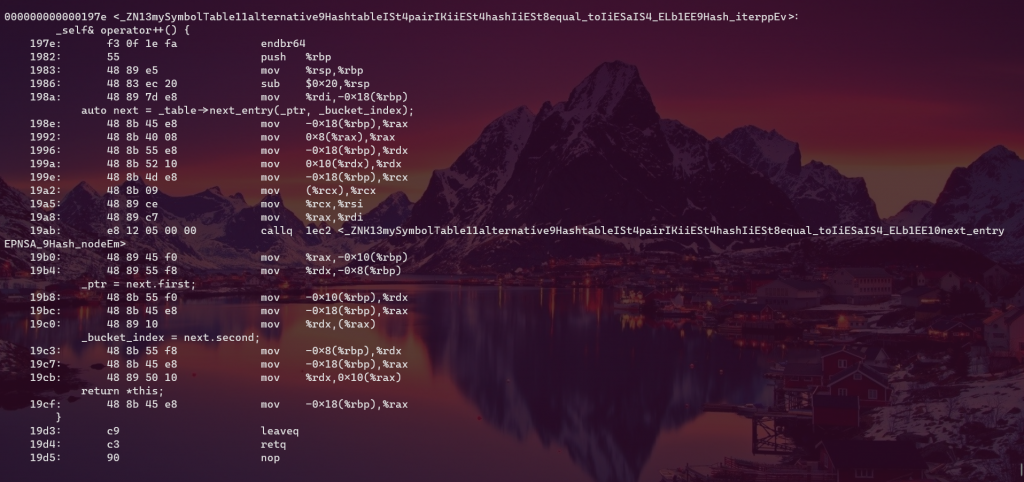

So, which one? (I think it should the first one, any rational human beings would make this choice, I suppose. Don’t believe it??)

Now have a look at their assembly code

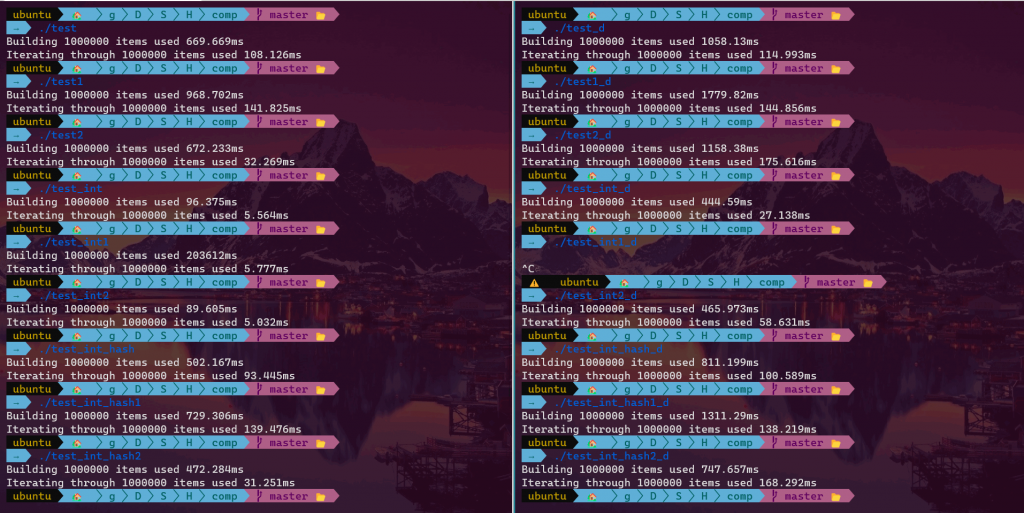

The bizarre thing is that in debug mode (-g flag), the 1st case beats the 2nd by about one fifth (t1 = 82% * t2 ). While if optimization is enabled (-O3 flag), it’s a whole different story. The summary table is given below.

Here, _d suffix means debug version, *1 suffix means myst::HashMap (1st case), *2 suffix means myst::alternative::HashMap (2nd case), and no suffix suggests we are using std::unordered_map.

We can see from the results that optimization -O3 barely boosts the performance of the 1st case (std::unordered_map and myst::HashMap) except the test_int cases, but rather make a significant boost in the 2nd case (myst::alternative::HashMap). Given the 2nd is a little bit more complicated, it is therefore expected there will be more space for the 2nd case to optimize, for example, inlining. Still, I don’t think it can beat the 1st small code. How do we explain this weird phenomenon?

(In my understanding, small code means fast, think about cache, and technically speaking, 2nd case should never be faster than the 1st one.)

How To Implement A Real Emplace Routine

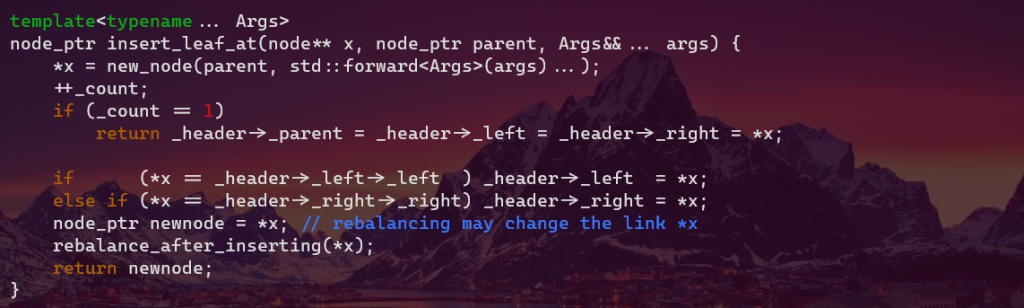

Above is a piece of code that attempts to implement the emplace family routines. One can insert something like const value_type& (value_type&&) val or variadic arguments Args&&... args, both of which then can be handled by the insert_leaf_at subroutine

Of course, this simple implementation is flawed as it requires an extra move operation. In order to obtain the key from Args&&... args, we need to somehow get the value itself which can be a value (set) or a pair of <key, val> values (map). This is done by using the compile-time template bool constant/parameter IsMap. If we must construct the actual object within the method insert_at_leaf, then, well, an extra move operation is inevitable.

The solution to the question is we construct the node in-place, thus the name empalce ;) We first construct the node in the heap using the variadic arguments node_ptr z = new_node(/*parent=*/nullptr, std::forward<Args>(args)...); , then get_key from the node by reference. And what is left is we walk through the tree and find the right position to insert the node and reset the parent link. Tada🎉, there you are. This makes even more sense if we look at the C++ specification where node_type and node_handle were introduced in C++17. Their presence makes implementing emplace more easily and naturally.

But the C++ folks are soooo ambitious and if the original one has so many combined benefits (simplicity, flexibility, versatility, etc.) and actually works pretty well (given the overhead of an extra move operation is negligible), we can just keep the way it is :)

Ref: A C++17 compliant AVL map container.

Innovation

Happy Happy Birthday

So, happy happy birthday to a marvelous friend, Ania;)

A Running Example

#include <stdio.h>

int add(int a, int b)

{

int c = a+b;

printf("@add(): &a=%p, &b=%p\n", &a, &b);

return c;

}

int main()

{

int i = 3;

int j = 4;

int k = add(i,j);

printf("@main(): &i=%p, &j=%p, &k=%p\n", &i, &j, &k);

return k;

}

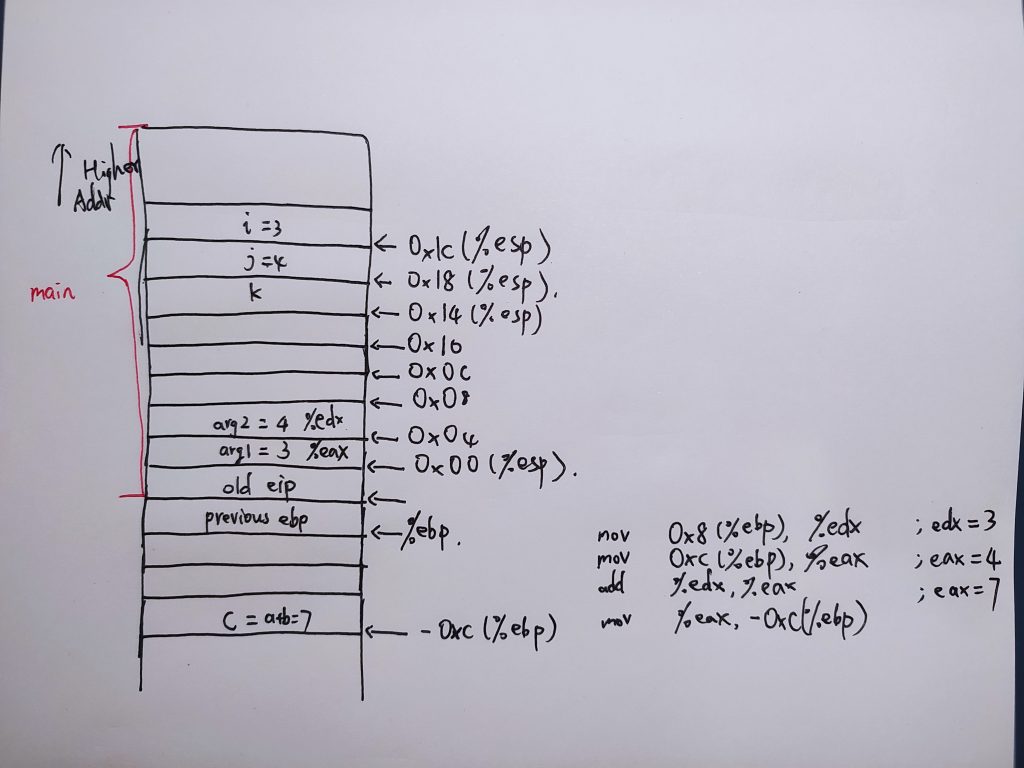

I made a test in Dev-C++ (version 5.7.1, with MinGW GCC 4.8.1 32-bit), and in debug mode, via the CPU Window it produced the following instructions:

procedure main:

0x004016e0 <+0>: push %ebp 0x004016e1 <+1>: mov %esp,%ebp 0x004016e3 <+3>: and $0xfffffff0,%esp 0x004016e6 <+6>: sub $0x20,%esp 0x004016e9 <+9>: call 0x401cc0 <__main> => 0x004016ee <+14>: movl $0x3,0x1c(%esp) 0x004016f6 <+22>: movl $0x4,0x18(%esp) 0x004016fe <+30>: mov 0x18(%esp),%edx 0x00401702 <+34>: mov 0x1c(%esp),%eax 0x00401706 <+38>: mov %edx,0x4(%esp) 0x0040170a <+42>: mov %eax,(%esp) 0x0040170d <+45>: call 0x4016b0 <add> 0x00401712 <+50>: mov %eax,0x14(%esp) 0x00401716 <+54>: lea 0x14(%esp),%eax 0x0040171a <+58>: mov %eax,0xc(%esp) 0x0040171e <+62>: lea 0x18(%esp),%eax 0x00401722 <+66>: mov %eax,0x8(%esp) 0x00401726 <+70>: lea 0x1c(%esp),%eax 0x0040172a <+74>: mov %eax,0x4(%esp) 0x0040172e <+78>: movl $0x40507a,(%esp) 0x00401735 <+85>: call 0x4036c8 <printf> 0x0040173a <+90>: mov 0x14(%esp),%eax 0x0040173e <+94>: leave 0x0040173f <+95>: ret

subroutine add:

0x004016b0 <+0>: push %ebp 0x004016b1 <+1>: mov %esp,%ebp 0x004016b3 <+3>: sub $0x28,%esp 0x004016b6 <+6>: mov 0x8(%ebp),%edx 0x004016b9 <+9>: mov 0xc(%ebp),%eax 0x004016bc <+12>: add %edx,%eax 0x004016be <+14>: mov %eax,-0xc(%ebp) 0x004016c1 <+17>: lea 0xc(%ebp),%eax 0x004016c4 <+20>: mov %eax,0x8(%esp) 0x004016c8 <+24>: lea 0x8(%ebp),%eax 0x004016cb <+27>: mov %eax,0x4(%esp) 0x004016cf <+31>: movl $0x405064,(%esp) 0x004016d6 <+38>: call 0x4036c8 <printf> 0x004016db <+43>: mov -0xc(%ebp),%eax 0x004016de <+46>: leave 0x004016df <+47>: ret

Following these instructions, I drew a simple diagram (partial, incomplete):

The arguments for printf were left out in this diagram for simplicity, but it is not difficult to imagine the whole running process with the aid of the above instructions. The calling convention, however, can be different from another compiler. For example, on my Ubuntu 20.04.1 LTS server with GCC 9.3.0, it yields something like

@add(): &a=0x7ffdf0f69a3c, &b=0x7ffdf0f69a38 @main(): &i=0x7ffdf0f69a6c, &j=0x7ffdf0f69a70, &k=0x7ffdf0f69a74

which is completely the opposite of the above diagram: the passed arguments were arranged in decreasing addresses, and the local vars were placed in increasing addresses. For local variables, the compiler knows in advance how much space should be allocated in the stack by looking at its symbol table. Then it allocates enough space by subtracting some value from the RSP register. Thus, it looks like the compiler can place the local vars wherever it likes, it just needs to perform some arithmetics based on the RSP or RBP register. A good explanation for the locations of the passed arguments is, as opposed to storing them on the caller stack, it first copies those arguments to registers (if possible) in reverse order and then saves them to the callee stack near the beginning in argument order (RDI, RSI, RDX, RCX, R8, R9). In this way, the value in the EDI register (1st argument) is first placed into the callee stack and hence it has a higher address. Aha! Now it makes perfect sense! Next, let’s check it out by looking at the disassembly by running gdb a.out -batch -ex 'disassemble/s main' (or add).

Dump of assembler code for function main:

add.c:

11 {

0x00000000000011a7 <+0>: endbr64

0x00000000000011ab <+4>: push %rbp

0x00000000000011ac <+5>: mov %rsp,%rbp

0x00000000000011af <+8>: sub $0x20,%rsp

0x00000000000011b3 <+12>: mov %fs:0x28,%rax

0x00000000000011bc <+21>: mov %rax,-0x8(%rbp)

0x00000000000011c0 <+25>: xor %eax,%eax

12 int i = 3;

0x00000000000011c2 <+27>: movl $0x3,-0x14(%rbp)

13 int j = 4;

0x00000000000011c9 <+34>: movl $0x4,-0x10(%rbp)

14 int k = add(i,j);

0x00000000000011d0 <+41>: mov -0x10(%rbp),%edx

0x00000000000011d3 <+44>: mov -0x14(%rbp),%eax

0x00000000000011d6 <+47>: mov %edx,%esi

0x00000000000011d8 <+49>: mov %eax,%edi

0x00000000000011da <+51>: callq 0x1169 <add>

0x00000000000011df <+56>: mov %eax,-0xc(%rbp)

15 printf("@main(): &i=%p, &j=%p, &k=%p\n", &i, &j, &k);

0x00000000000011e2 <+59>: lea -0xc(%rbp),%rcx

0x00000000000011e6 <+63>: lea -0x10(%rbp),%rdx

0x00000000000011ea <+67>: lea -0x14(%rbp),%rax

0x00000000000011ee <+71>: mov %rax,%rsi

0x00000000000011f1 <+74>: lea 0xe22(%rip),%rdi # 0x201a

0x00000000000011f8 <+81>: mov $0x0,%eax

0x00000000000011fd <+86>: callq 0x1070 <printf@plt>

16 return k;

0x0000000000001202 <+91>: mov -0xc(%rbp),%eax

17 }

0x0000000000001205 <+94>: mov -0x8(%rbp),%rsi

0x0000000000001209 <+98>: xor %fs:0x28,%rsi

0x0000000000001212 <+107>: je 0x1219 <main+114>

0x0000000000001214 <+109>: callq 0x1060 <__stack_chk_fail@plt>

0x0000000000001219 <+114>: leaveq

0x000000000000121a <+115>: retq

End of assembler dump.

Dump of assembler code for function add:

add.c:

4 {

0x0000000000001169 <+0>: endbr64

0x000000000000116d <+4>: push %rbp

0x000000000000116e <+5>: mov %rsp,%rbp

0x0000000000001171 <+8>: sub $0x20,%rsp

0x0000000000001175 <+12>: mov %edi,-0x14(%rbp)

0x0000000000001178 <+15>: mov %esi,-0x18(%rbp)

5 int c = a+b;

0x000000000000117b <+18>: mov -0x14(%rbp),%edx

0x000000000000117e <+21>: mov -0x18(%rbp),%eax

0x0000000000001181 <+24>: add %edx,%eax

0x0000000000001183 <+26>: mov %eax,-0x4(%rbp)

6 printf("@add(): &a=%p, &b=%p\n", &a, &b);

0x0000000000001186 <+29>: lea -0x18(%rbp),%rdx

0x000000000000118a <+33>: lea -0x14(%rbp),%rax

0x000000000000118e <+37>: mov %rax,%rsi

0x0000000000001191 <+40>: lea 0xe6c(%rip),%rdi # 0x2004

0x0000000000001198 <+47>: mov $0x0,%eax

0x000000000000119d <+52>: callq 0x1070 <printf@plt>

7 return c;

0x00000000000011a2 <+57>: mov -0x4(%rbp),%eax

8 }

0x00000000000011a5 <+60>: leaveq

0x00000000000011a6 <+61>: retq

End of assembler dump.

The above assembler code agrees with our speculation. Note, however, the location of the local variable c here. It is above the two arguments in the stack (just one position below the RBP register). If we rewrite the main function as int main(int argc, char** argv), then we can get something like

0x00000000000011a7 <+0>: endbr64 0x00000000000011ab <+4>: push %rbp 0x00000000000011ac <+5>: mov %rsp,%rbp 0x00000000000011af <+8>: sub $0x30,%rsp 0x00000000000011b3 <+12>: mov %edi,-0x24(%rbp) 0x00000000000011b6 <+15>: mov %rsi,-0x30(%rbp) 0x00000000000011ba <+19>: mov %fs:0x28,%rax 0x00000000000011c3 <+28>: mov %rax,-0x8(%rbp) 0x00000000000011c7 <+32>: xor %eax,%eax

in the setup of the stack of main. We can see that the two passed arguments argc and argv are stored at the stack top via the EDI and RSI registers, respectively.

(Jul 15, 2023)

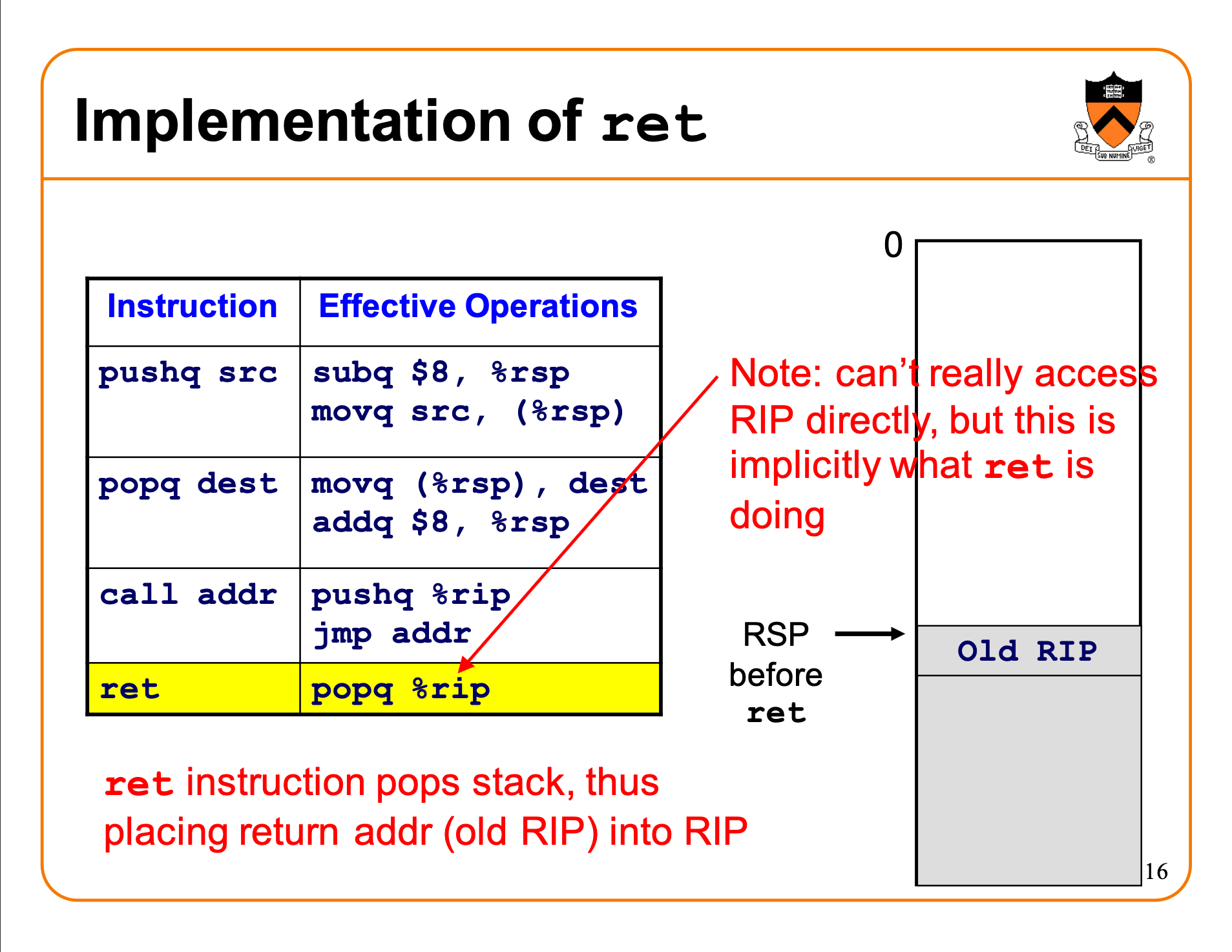

Effective implementations of some x86 instructions (slides from here):

An Interesting C Program

#include <stdio.h>

int main()

{

int i = 3;

int j = 4;

int *p = &j;

*(p+1) = 7;

#if 1

// addr &i is higher than &j

printf("%d, %d\n", i, j); // prints 7, 4

#else

// addr &j is higher than &i

printf("%d, %d\n%p, %p\n", i, j, &i, &j); // prints 3, 4

#endif

return 0;

}

gdb a.out -batch -ex 'disassemble/s main'

Dump of assembler code for function main:

a.c:

4 {

0x0000000000001169 <+0>: endbr64

0x000000000000116d <+4>: push %rbp

0x000000000000116e <+5>: mov %rsp,%rbp

0x0000000000001171 <+8>: sub $0x20,%rsp

0x0000000000001175 <+12>: mov %fs:0x28,%rax

0x000000000000117e <+21>: mov %rax,-0x8(%rbp)

0x0000000000001182 <+25>: xor %eax,%eax

5 int i = 3;

0x0000000000001184 <+27>: movl $0x3,-0x14(%rbp)

6 int j = 4;

0x000000000000118b <+34>: movl $0x4,-0x18(%rbp)

7 int *p = &j;

0x0000000000001192 <+41>: lea -0x18(%rbp),%rax

0x0000000000001196 <+45>: mov %rax,-0x10(%rbp)

8 *(p+1) = 7;

0x000000000000119a <+49>: mov -0x10(%rbp),%rax

0x000000000000119e <+53>: add $0x4,%rax

0x00000000000011a2 <+57>: movl $0x7,(%rax)

9 #if 1

10 // addr &i is higher than &j

11 printf("%d, %d\n", i, j); // prints 7, 4

0x00000000000011a8 <+63>: mov -0x18(%rbp),%edx

0x00000000000011ab <+66>: mov -0x14(%rbp),%eax

0x00000000000011ae <+69>: mov %eax,%esi

0x00000000000011b0 <+71>: lea 0xe4d(%rip),%rdi # 0x2004

0x00000000000011b7 <+78>: mov $0x0,%eax

0x00000000000011bc <+83>: callq 0x1070 <printf@plt>

12 #else

13 // addr &j is higher than &i

14 printf("%d, %d\n%p, %p\n", i, j, &i, &j); // prints 3, 4

15 #endif

16 return 0;

0x00000000000011c1 <+88>: mov $0x0,%eax

17 }

0x00000000000011c6 <+93>: mov -0x8(%rbp),%rcx

0x00000000000011ca <+97>: xor %fs:0x28,%rcx

0x00000000000011d3 <+106>: je 0x11da <main+113>

0x00000000000011d5 <+108>: callq 0x1060 <__stack_chk_fail@plt>

0x00000000000011da <+113>: leaveq

0x00000000000011db <+114>: retq

End of assembler dump.

Dump of assembler code for function main:

a.c:

4 {

0x0000000000001169 <+0>: endbr64

0x000000000000116d <+4>: push %rbp

0x000000000000116e <+5>: mov %rsp,%rbp

0x0000000000001171 <+8>: sub $0x20,%rsp

0x0000000000001175 <+12>: mov %fs:0x28,%rax

0x000000000000117e <+21>: mov %rax,-0x8(%rbp)

0x0000000000001182 <+25>: xor %eax,%eax

5 int i = 3;

0x0000000000001184 <+27>: movl $0x3,-0x18(%rbp)

6 int j = 4;

0x000000000000118b <+34>: movl $0x4,-0x14(%rbp)

7 int *p = &j;

0x0000000000001192 <+41>: lea -0x14(%rbp),%rax

0x0000000000001196 <+45>: mov %rax,-0x10(%rbp)

8 *(p+1) = 7;

0x000000000000119a <+49>: mov -0x10(%rbp),%rax

0x000000000000119e <+53>: add $0x4,%rax

0x00000000000011a2 <+57>: movl $0x7,(%rax)

9 #if 0

10 // addr &i is higher than &j

11 printf("%d, %d\n", i, j); // prints 7, 4

12 #else

13 // addr &j is higher than &i

14 printf("%d, %d\n%p, %p\n", i, j, &i, &j); // prints 3, 4

0x00000000000011a8 <+63>: mov -0x14(%rbp),%edx

0x00000000000011ab <+66>: mov -0x18(%rbp),%eax

0x00000000000011ae <+69>: lea -0x14(%rbp),%rsi

0x00000000000011b2 <+73>: lea -0x18(%rbp),%rcx

0x00000000000011b6 <+77>: mov %rsi,%r8

0x00000000000011b9 <+80>: mov %eax,%esi

0x00000000000011bb <+82>: lea 0xe42(%rip),%rdi # 0x2004

0x00000000000011c2 <+89>: mov $0x0,%eax

0x00000000000011c7 <+94>: callq 0x1070 <printf@plt>

15 #endif

16 return 0;

0x00000000000011cc <+99>: mov $0x0,%eax

17 }

0x00000000000011d1 <+104>: mov -0x8(%rbp),%rdi

0x00000000000011d5 <+108>: xor %fs:0x28,%rdi

0x00000000000011de <+117>: je 0x11e5 <main+124>

0x00000000000011e0 <+119>: callq 0x1060 <__stack_chk_fail@plt>

0x00000000000011e5 <+124>: leaveq

0x00000000000011e6 <+125>: retq

End of assembler dump.

Getting older, huh?

Getting older, huh? I have experienced some things that were extraordinarily meaningful in the year 2020: I started to write academic papers in English, pursuing perfectly-typeset articles and I have done some results that I’m now still very proud of. I started to browse English websites every day, hankering for an ideal & beautiful country to live in and work in. I went to see the sea for the first time on June 3, 2020, with my roomie Wang Yichuan, with whom I had a few conflicts but also indelible time together doing mathematical modeling and cooking. I started to notice that I was really a jerk and I had done a few unkind things which I took for granted, I wished to go to the church and confess my sins. I became more sensitive, sweet, and understanding. I found my goal again which is to continue my studies as I really enjoyed a lot the whole process when I was doing rigorous research. I never have had so strong a desire to study, and I wish to go to Germany to pursue my academic career!

Why Write a Thesis?

(I found some very insightful and enlightening words about why we should write a thesis.)

The answer “Because it’s required” is not good enough. In fact, the question “Why write a thesis?” is itself misleading, because it implies that what’s most important is the final product: an object that you will print out on acid-free paper, pinch into a spring binder, and hand in.

The more useful question is, “What am I going to get out of this experience?” This question foregrounds the fact that thesis-writing is a process, and that the purpose of that process is not only to produce a great thesis, but even more importantly, to transform you into a better writer, researcher, and most of all, thinker.

As you envision, research, structure, write, and rewrite your thesis, you will encounter complex and important questions, grapple with unwieldy and sometimes overwhelming data, listen in new ways to ongoing

scholarly conversations, confront challenging intellectual puzzles, and struggle to form and articulate your own thoughts. These trials will change you. If you trust and commit to the process, you will emerge at the end of your senior year with new skills and a better sense of your own voice. And as a more powerful writer and thinker, you will be more effective in all your post-graduation pursuits.

In order to achieve the most important goal of self-transformation, a student must aim, paradoxically, for another goal: creating new scholarly knowledge. Imagine that you are trying to spear a fish in a pond.

If you aim your spear at the spot where you see the fish, you will miss, because the surface of the water refracts light. Similarly, if you aim only to transform yourself into a better writer, researcher, and thinker,

you will miss both that goal and the goal of producing high-quality scholarship. You must endeavor, with every ounce of intelligence and strength you have, to produce an original and valuable academic

argument. As you do so, you will transform—inevitably. Aim for the tangible goal of writing a superb thesis, and you will reach the more important but elusive objective beyond it.

The process of writing a thesis can be a glorious adventure. I hope that you will experience the exhilaration of watching your ideas emerge, the astonishment of discovering newly developed abilities, and the satisfaction of completing an arduous but important journey. Now is the time to take your first step.

–Robin Bernstein, Assistant Professor of Women, Gender, and Sexuality and of History and Literature

The Godfather

A man who doesn’t spend time with his family can never be a real man. – Vito Corleone