This week and last weekend I mainly focused on memory pools, allocators, and CPU caches. I think I now roughly understand how allocators work: they separate memory allocation from object initialization. For example, `std::vector::reserve()` allocates storage but doesn't construct any elements in it, which improves performance when we don't need to initialize the data immediately.
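The allocation/construction split can be shown directly with `std::allocator_traits`. The sketch below is only an illustration, and the function names `allocate_then_construct` and `reserved_but_empty` are hypothetical, not from any library. It first grabs raw storage, then constructs objects in that storage only when they are needed, and destroys exactly what it constructed. The second function shows that `reserve()` grows a vector's capacity without constructing any elements.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <vector>

// Allocate raw storage for `capacity` strings, construct only the first `used`,
// then tear everything down. Returns the concatenated contents so the caller
// can check what was actually constructed.
std::string allocate_then_construct(std::size_t capacity, std::size_t used) {
    using Alloc  = std::allocator<std::string>;
    using Traits = std::allocator_traits<Alloc>;
    Alloc alloc;

    // Step 1: raw memory only; no std::string constructor runs here.
    std::string* p = Traits::allocate(alloc, capacity);

    // Step 2: construct objects in place, on demand ("a", "b", "c", ...).
    for (std::size_t i = 0; i < used; ++i)
        Traits::construct(alloc, p + i, 1, static_cast<char>('a' + i));

    std::string joined;
    for (std::size_t i = 0; i < used; ++i) joined += p[i];

    // Step 3: destroy exactly the constructed objects (in reverse), then free the storage.
    for (std::size_t i = used; i > 0; --i)
        Traits::destroy(alloc, p + i - 1);
    Traits::deallocate(alloc, p, capacity);
    return joined;
}

// std::vector::reserve() exposes the same split: capacity grows, size stays 0.
std::vector<int> reserved_but_empty(std::size_t n) {
    std::vector<int> v;
    v.reserve(n);  // one allocation, no elements constructed
    return v;
}
```

For example, `allocate_then_construct(8, 3)` reserves room for eight strings but only ever builds three of them, and `reserved_but_empty(1000)` returns a vector whose `size()` is 0 while its `capacity()` is at least 1000.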

CPU caches are another very interesting and important aspect that can hugely influence the performance of our programs, because they are much faster than main memory (RAM). As a rule, smaller memories are faster. A great resource I found on Tuesday evening was a YouTube video of Scott Meyers's talk at the code::dive conference 2014: a very humorous, high-quality presentation. Really awesome!
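To see why cache behavior matters, here is a minimal sketch (the helpers `sum_matrix` and `time_traversal_ms` are hypothetical names) that sums the same row-major matrix twice: once row by row, which walks memory sequentially, and once column by column, which jumps `n` elements per step and uses only a small part of each cache line it loads. Both passes produce the same sum; for large `n` the column-wise pass is usually slower, but the exact gap depends on the CPU, so no numbers are claimed here.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <vector>

// Sum an n x n row-major matrix either along rows (contiguous, cache-friendly)
// or down columns (stride of n elements, a new cache line on almost every step).
std::uint64_t sum_matrix(const std::vector<std::uint32_t>& m, std::size_t n, bool by_rows) {
    std::uint64_t total = 0;
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j)
            total += by_rows ? m[i * n + j] : m[j * n + i];
    return total;
}

// Runs one full traversal, stores its sum in out_sum, and returns elapsed milliseconds.
double time_traversal_ms(const std::vector<std::uint32_t>& m, std::size_t n, bool by_rows,
                         std::uint64_t& out_sum) {
    auto start = std::chrono::steady_clock::now();
    out_sum = sum_matrix(m, n, by_rows);
    auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(stop - start).count();
}
```

Calling `time_traversal_ms` with `by_rows = true` and then `false` on, say, a 4096 x 4096 matrix is a quick way to observe the difference on your own machine.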

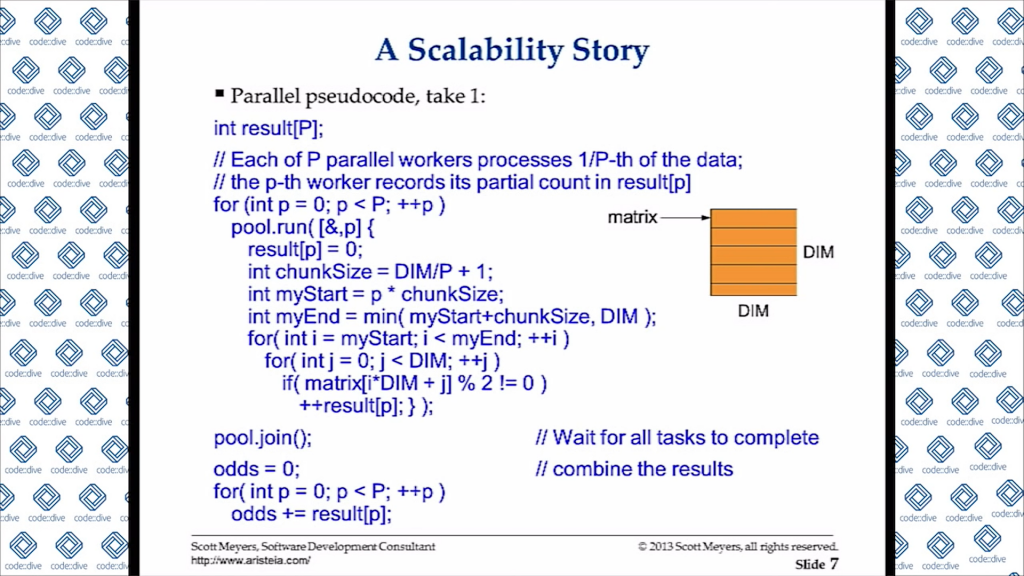

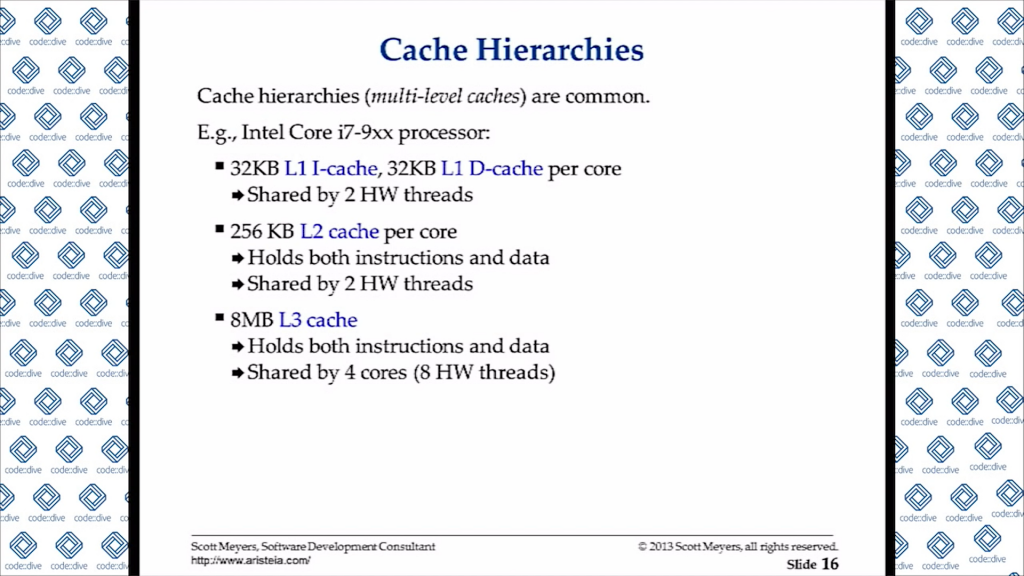

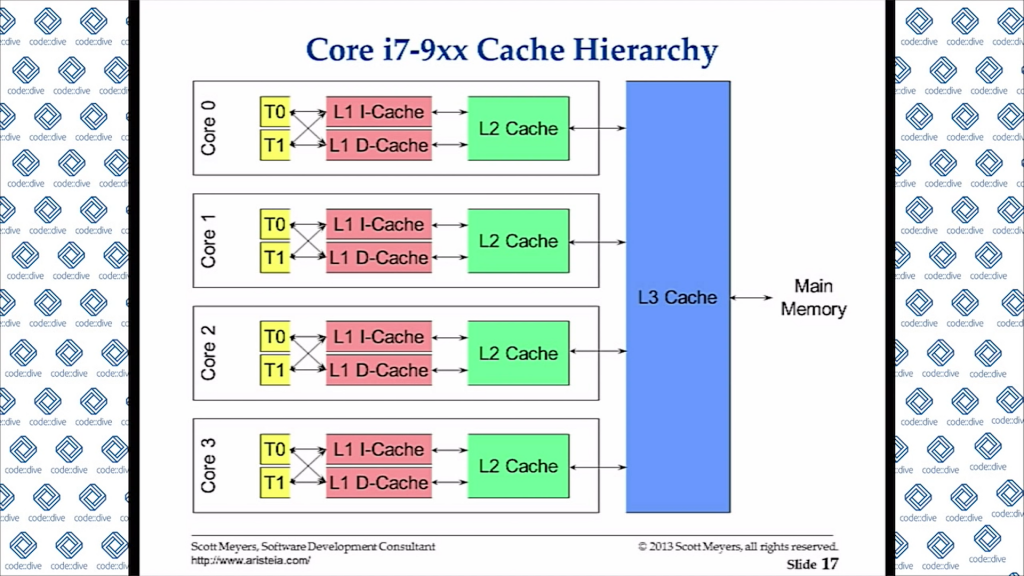

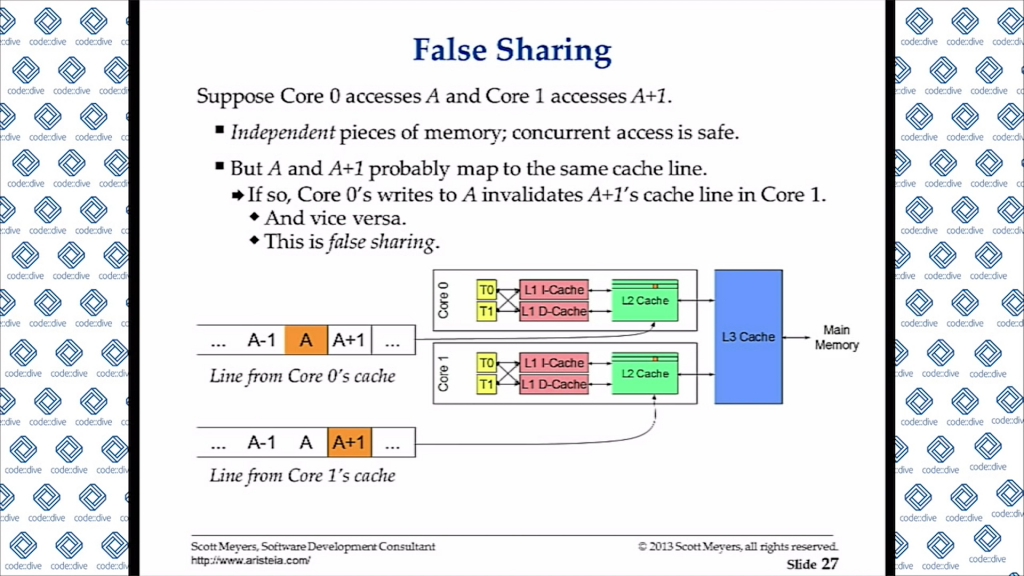

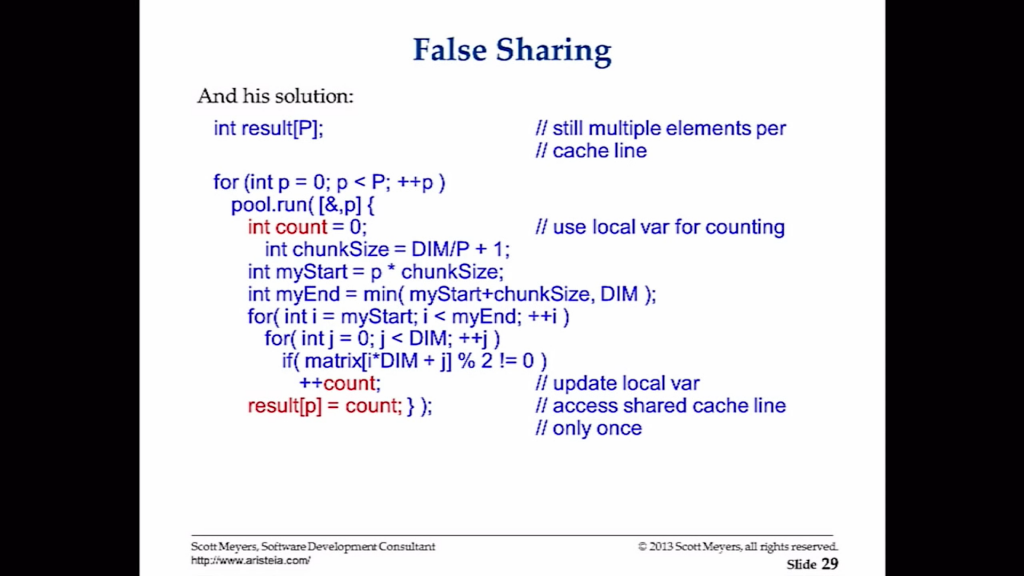

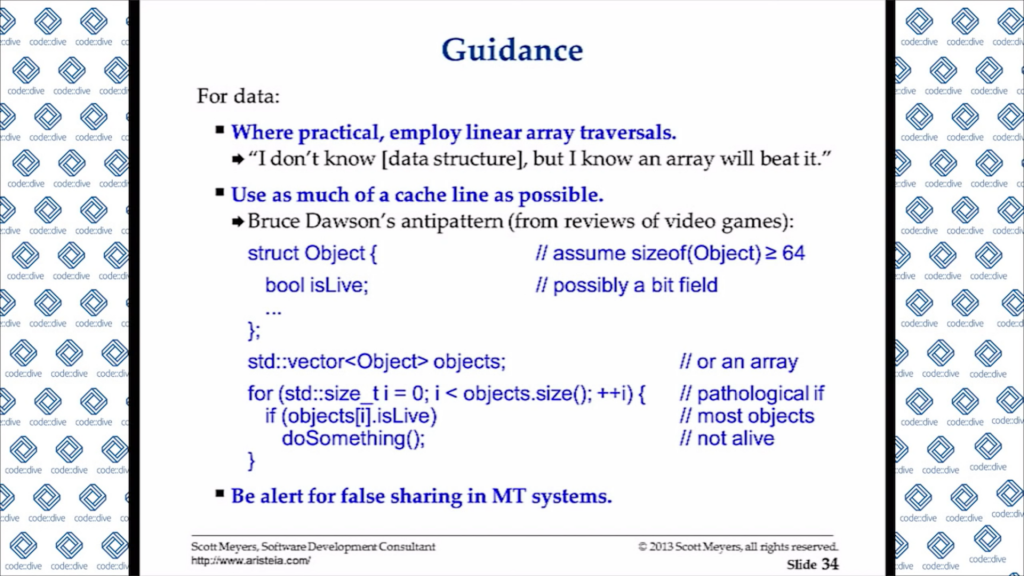

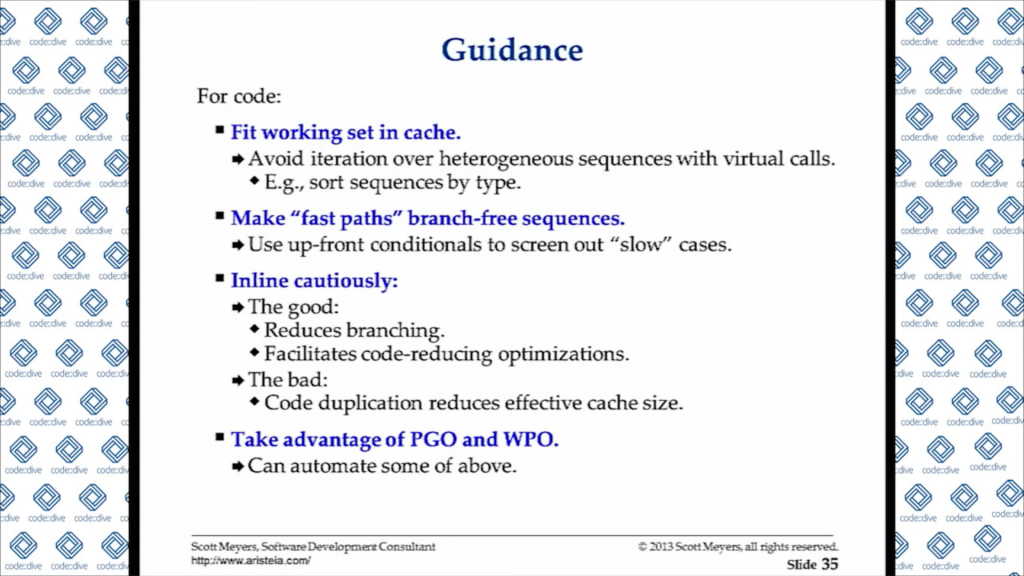

Here are a few screenshots of the slides; the complete presentation can be downloaded as a PDF from here.

The talk covers several interesting topics: cache lines, false sharing, data-oriented design, etc. Very practical and constructive.
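False sharing is easy to reproduce. The sketch below assumes 64-byte cache lines (common on x86-64, but not universal) and uses hypothetical types `Packed` and `Padded` and a hypothetical helper `hammer`. Two threads each increment only their own counter; the only difference between the two structs is whether the counters are likely to share one cache line. The final counts are identical, so any speed difference comes purely from that line bouncing between cores.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <thread>

// Assumption: 64-byte cache lines (typical on current x86-64; not guaranteed).
constexpr std::size_t kCacheLine = 64;

// Two counters next to each other: they very likely share one cache line,
// so two threads writing them keep invalidating each other's copy (false sharing).
struct Packed {
    std::atomic<long> a{0};
    std::atomic<long> b{0};
};

// Same counters, each forced onto its own cache line via alignas.
struct Padded {
    alignas(kCacheLine) std::atomic<long> a{0};
    alignas(kCacheLine) std::atomic<long> b{0};
};

// Each thread touches only its own counter; results are the same for both layouts,
// only the cache-line traffic (and therefore the run time) differs.
template <typename Counters>
void hammer(Counters& c, long iterations) {
    std::thread t1([&] {
        for (long i = 0; i < iterations; ++i) c.a.fetch_add(1, std::memory_order_relaxed);
    });
    std::thread t2([&] {
        for (long i = 0; i < iterations; ++i) c.b.fetch_add(1, std::memory_order_relaxed);
    });
    t1.join();
    t2.join();
}
```

Timing `hammer` on each struct with a large iteration count is the usual way to observe the effect. C++17 also declares `std::hardware_destructive_interference_size` in `<new>` for exactly this padding purpose, though not every standard library version provides it, which is why a fixed 64 is assumed here.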

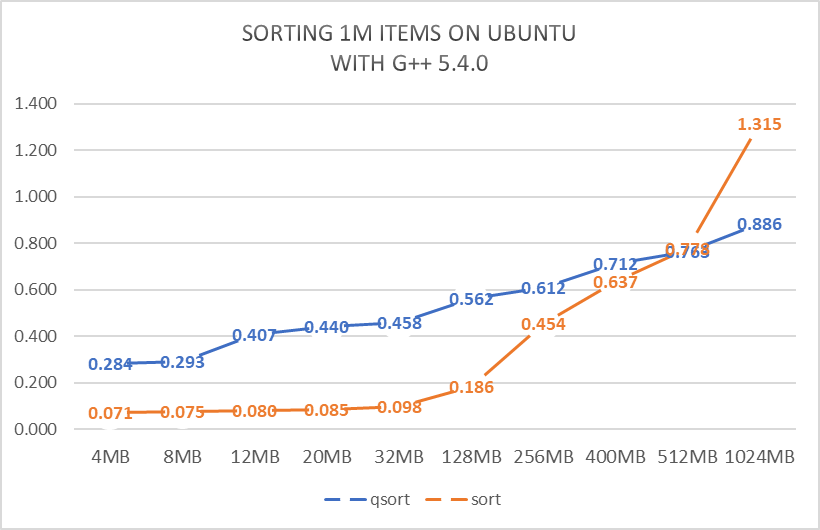

From Wednesday on, I revisited my qsort vs std::sort comparison in terms of CPU caches and cache lines. I found a huge bug (a typo) in my qsort function call (embarrassing!). Then I developed the comparison further, added a few more tests (for instance, indirect sort), and drew some charts. The details can be found here.
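For reference, here is a minimal sketch of the three variants, with hypothetical function names: `std::qsort` with a C comparator, `std::sort` with a lambda, and an indirect sort. "Indirect sort" is assumed here to mean sorting an array of indices by the keys they refer to, which is one common meaning. This is not the benchmark code behind the charts and it does not reproduce the bug mentioned above; it only shows the call shapes and why the variants behave differently.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <numeric>
#include <vector>

// C-style comparator: qsort only sees void pointers, so every comparison is an
// indirect call through a function pointer.
int compare_ints(const void* lhs, const void* rhs) {
    int a = *static_cast<const int*>(lhs);
    int b = *static_cast<const int*>(rhs);
    return (a > b) - (a < b);  // avoids the overflow risk of `a - b`
}

void sort_with_qsort(std::vector<int>& v) {
    if (v.empty()) return;  // qsort requires a valid base pointer even for 0 elements
    // Arguments: base pointer, element COUNT, element SIZE in bytes, comparator.
    std::qsort(v.data(), v.size(), sizeof(int), compare_ints);
}

void sort_with_std_sort(std::vector<int>& v) {
    // The lambda's type is known at compile time, so the comparison can be inlined.
    std::sort(v.begin(), v.end(), [](int a, int b) { return a < b; });
}

// Indirect sort: leave `keys` untouched and sort a vector of indices instead.
// Each comparison reads keys through an index, so the memory accesses are
// scattered rather than sequential, which is less cache-friendly.
std::vector<std::size_t> indirect_sort(const std::vector<int>& keys) {
    std::vector<std::size_t> idx(keys.size());
    std::iota(idx.begin(), idx.end(), std::size_t{0});
    std::sort(idx.begin(), idx.end(),
              [&keys](std::size_t i, std::size_t j) { return keys[i] < keys[j]; });
    return idx;
}
```

For example, `indirect_sort({30, 10, 20})` returns the index order `{1, 2, 0}` and leaves the keys themselves in place.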

Update (Nov 24, 2020)

On cache associativity, see https://www.youtube.com/watch?v=UCK-0fCchmY, a very detailed and intuitive demonstration.
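Associativity boils down to which set an address maps to. The sketch below assumes a 32 KiB, 8-way set-associative cache with 64-byte lines (a common L1 data cache configuration, but check your own CPU) and the simple modulo indexing used in textbook descriptions; real hardware may differ, for example with hashed indexing in some last-level caches. `CacheGeometry` is a hypothetical helper. This geometry gives 32768 / (64 × 8) = 64 sets, so addresses exactly 4 KiB apart always land in the same set.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Assumed example geometry: 32 KiB total, 64-byte lines, 8-way set associative.
struct CacheGeometry {
    std::size_t size_bytes = 32 * 1024;
    std::size_t line_bytes = 64;
    std::size_t ways = 8;

    // Number of sets = total lines / lines per set.
    std::size_t num_sets() const { return size_bytes / (line_bytes * ways); }

    // An address maps to exactly one set (line number modulo set count);
    // within that set, the line may occupy any of the `ways` slots.
    std::size_t set_index(std::uintptr_t address) const {
        return (address / line_bytes) % num_sets();
    }
};
```

With these assumptions, `set_index(0)` and `set_index(4096)` are both 0, so nine frequently used cache lines spaced 4 KiB apart already overflow a single 8-way set and start evicting each other, even though the cache as a whole is almost empty.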

Additional resource: Gallery of Processor Cache Effects by Igor Ostrovsky.